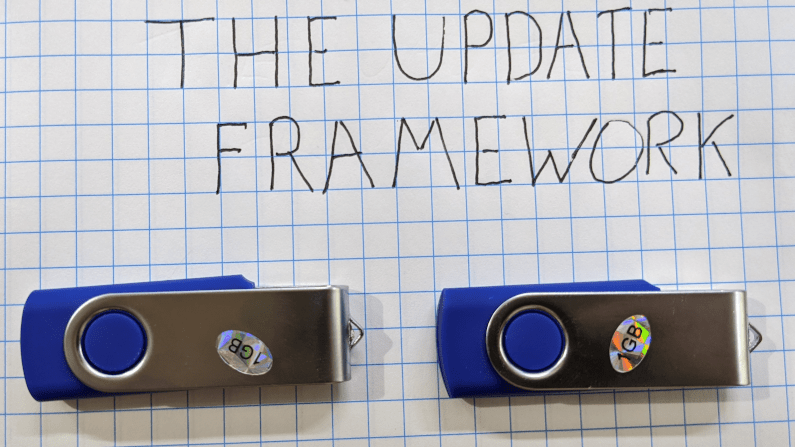

Software. You know it will need updating. With every consumer product you have ever bought, the first thing it did was install the “real” version. How do we update software securely? Let’s talk about The Update Framework.

Freshness is one of the constant battles in software operations. It seems like every piece of software needs loving hand-feeding and hourly updates. Wouldn’t it be great if software could just look after itself and stay up to date? What’s that you say? You’re worried about auto-updating software? How could that be secure? Well, pull up a chair and let me tell you the tale of The Update Framework, where auto-update and security finally come together.

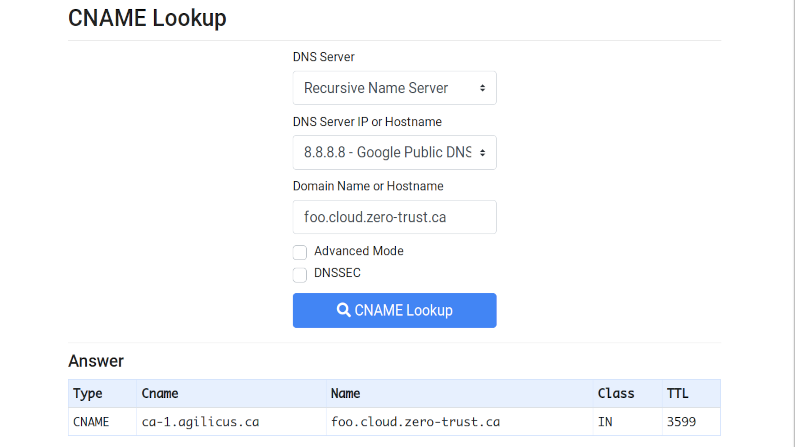

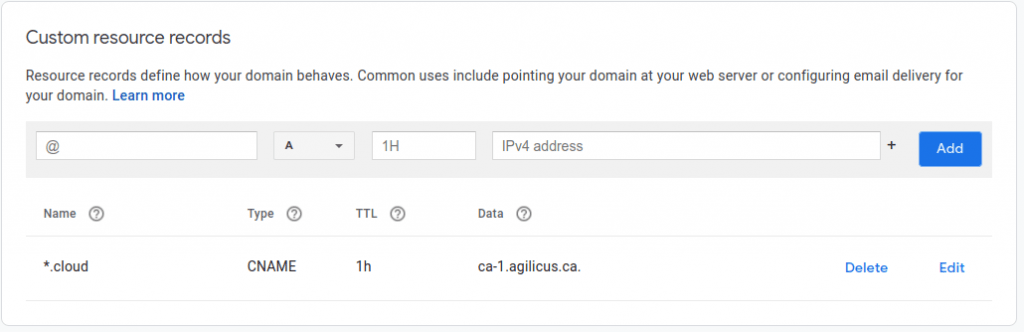

Nearly all of our software runs in our cloud, operated by us. We use Dependabot and our CI/CD pipelines to keep it fresh. But one of our features is a connector that runs on your site, and we want keeping it up to date, safely and securely, to cost you nothing.

The Update Framework (TUF) is a CNCF project for securing software update systems. It has also been adapted for deeply embedded systems such as automobiles (see Uptane). In a nutshell, you create a set of signing keys:

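- root key: used to sign the root metadata, which names the other keys; this is also how they get rotated

- target key: used to sign a new binary (target) and its hash

- snapshot key: used to sign a consistent view of the repository, e.g. “this is version 3, use these 9 files together”

- refresh key (TUF calls this the timestamp key): used to sign a short-lived statement that the repository is current, showing the repo itself is still being updated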
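To make the role split concrete, here is a minimal sketch of how a client decides whether to trust a piece of role metadata. It assumes a trimmed-down version of the root metadata layout from the TUF spec (the real file also carries the public keys themselves), uses made-up key IDs, and treats `verify` as a stand-in for a real signature check. The role TUF calls `timestamp` is the refresh key from the list above.

```python
from typing import Callable

# Stand-in for a real signature check (Ed25519, ECDSA, ...): takes
# (keyid, payload, signature) and returns True if the signature is valid.
Verifier = Callable[[str, bytes, bytes], bool]

# Hypothetical, trimmed-down "signed" section of root metadata, shaped like
# the TUF spec: each role lists the key IDs that may sign for it and how many
# of them must agree (the threshold). The key IDs here are made up.
ROOT_SIGNED = {
    "roles": {
        "root": {"keyids": ["root-1"], "threshold": 1},
        "targets": {"keyids": ["targets-1"], "threshold": 1},
        "snapshot": {"keyids": ["snapshot-1"], "threshold": 1},
        "timestamp": {"keyids": ["timestamp-1"], "threshold": 1},
    }
}


def meets_threshold(root_signed: dict, role: str, payload: bytes,
                    signatures: list, verify: Verifier) -> bool:
    """True if enough distinct keys authorized for `role` signed `payload`."""
    role_info = root_signed["roles"][role]
    authorized = set(role_info["keyids"])
    valid_keys = set()
    for sig in signatures:
        keyid = sig["keyid"]
        # Signatures from keys that root never assigned to this role are
        # ignored, so a stolen snapshot key cannot sign targets, for example.
        if keyid in authorized and verify(keyid, payload, sig["sig"]):
            valid_keys.add(keyid)  # each key counts at most once
    return len(valid_keys) >= role_info["threshold"]
```

Because only root decides which keys belong to which role, rotating a compromised online key means signing a new root, which is why the root key can stay offline most of the year.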

In the software you ship to your customer site, you embed a client library for The Update Framework (implementations exist for several languages, such as python-tuf and go-tuf). It periodically polls your online repository. If it finds updates, it verifies them against the signed metadata and downloads them, and your software then runs them.
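The client library refreshes metadata in a fixed order (root, then timestamp, then snapshot, then targets), and only then downloads a binary. The last step is simple enough to sketch on its own: a minimal example, assuming the downloaded file has a targets-metadata entry with `length` and a `sha256` hash. The file contents are hypothetical.

```python
import hashlib


def verify_target(data: bytes, target_info: dict) -> bool:
    """Check a downloaded file against its entry in signed targets metadata.

    target_info looks like {"length": 123, "hashes": {"sha256": "..."}}.
    """
    # A length mismatch is rejected before hashing; this also stops an
    # attacker from feeding the client an endlessly long download.
    if len(data) != target_info["length"]:
        return False
    actual = hashlib.sha256(data).hexdigest()
    return actual == target_info["hashes"]["sha256"]
```

Only a file that passes this check is handed to the connector to run; anything else is discarded.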

The signatures ensure that clients at the customer site reject any tampered metadata or binaries. But for that to hold, you need to segregate and control the signing keys above, and this is where it gets complex.
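Signatures alone do not stop an attacker from replaying old, validly signed metadata. TUF clients also refuse expired metadata (a freeze attack) and version numbers that go backwards (a rollback attack). Here is a minimal sketch of those two checks, assuming the spec's `expires`/`version` fields and its `...Z` UTC timestamp format. The function name is hypothetical.

```python
from datetime import datetime, timezone


def check_fresh(new_signed: dict, trusted_version: int, now=None) -> int:
    """Reject metadata that is expired or older than what we already trust."""
    if now is None:
        now = datetime.now(timezone.utc)
    # TUF expiry timestamps look like "2030-01-01T00:00:00Z" (UTC).
    expires = datetime.strptime(
        new_signed["expires"], "%Y-%m-%dT%H:%M:%SZ"
    ).replace(tzinfo=timezone.utc)
    if expires <= now:
        raise ValueError("metadata expired: possible freeze attack")
    if new_signed["version"] < trusted_version:
        raise ValueError("version went backwards: possible rollback attack")
    return new_signed["version"]
```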

First, the root key: you want it offline only, accessed perhaps once a year when you rotate the other keys. It should be nearly impossible for a bad actor to get it. I chose to encrypt it with a multi-word passphrase. The strength estimate for my passphrase read “Approximate Crack Time: 1,482,947,715,526,880,400 centuries”, so that probably won’t be how they get me. The key itself is strong (perhaps not quantum-proof, but certainly strong enough). With the private key encrypted under that passphrase, we now need to protect the encrypted file. For this I have chosen not to use a Hardware Security Module (HSM). Instead, I’ve placed it on a pair of USB keys, which I will keep in secure locations.
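Crack-time figures like the one quoted come down to simple arithmetic on entropy. A minimal sketch, assuming the words are chosen uniformly at random from a wordlist (7776 is the size of a standard Diceware list) and that an attacker tries a fixed, hypothetical number of guesses per second. Real estimators use their own models, so this will not reproduce the number above.

```python
import math


def passphrase_bits(num_words: int, wordlist_size: int) -> float:
    """Entropy in bits of words drawn uniformly at random from a wordlist."""
    return num_words * math.log2(wordlist_size)


def average_crack_years(bits: float, guesses_per_second: float) -> float:
    """Expected brute-force time: on average half the keyspace is searched."""
    seconds = 2 ** (bits - 1) / guesses_per_second
    return seconds / (365.25 * 24 * 3600)
```

Six Diceware words give about 77.5 bits. Each extra word multiplies the search space by 7776, and a slow key-derivation function in front of the encryption cuts `guesses_per_second`, which is where most of the practical margin comes from.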

Next, create the target, snapshot, and refresh keys, and use the root key to sign the root metadata that authorizes them. These keys are stored in Google Secret Manager, and IAM controls who can access them, and when. This provides my audit trail.
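A CI job that needs one of the online keys can read it from Secret Manager at signing time. This is a minimal sketch, assuming the `google-cloud-secret-manager` Python client and a hypothetical `tuf-<role>-key` secret naming scheme. The root key is deliberately never stored here. Whether each read appears in Cloud Audit Logs depends on your Data Access audit log settings.

```python
def secret_name(project: str, role: str, version: str = "latest") -> str:
    """Build the Secret Manager resource name for a role's signing key.

    The "tuf-<role>-key" naming scheme is a hypothetical convention.
    """
    return f"projects/{project}/secrets/tuf-{role}-key/versions/{version}"


def fetch_signing_key(project: str, role: str) -> bytes:
    """Read a role's private key; IAM decides whether this caller may."""
    # Imported lazily so the helper above works without the package installed.
    from google.cloud import secretmanager

    client = secretmanager.SecretManagerServiceClient()
    response = client.access_secret_version(
        request={"name": secret_name(project, role)}
    )
    return response.payload.data
```

Keeping one secret per role means IAM can grant the daily CI job only the refresh key, while the target key stays restricted to the people who decide what to publish.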

Our CI runs a daily job that re-signs the refresh metadata. We handle target updates manually, when we decide what to publish.
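The daily job boils down to producing the next refresh (TUF: timestamp) metadata with a bumped version and a new expiry, then signing it. A minimal sketch of the unsigned part, assuming the spec's timestamp layout. The two-day lifetime is a hypothetical choice, and the signing step (with the key fetched from Secret Manager) is omitted.

```python
from datetime import datetime, timedelta, timezone


def refresh_timestamp(current: dict, snapshot_version: int, now: datetime,
                      lifetime: timedelta = timedelta(days=2)) -> dict:
    """Build the next (unsigned) timestamp metadata for the daily CI job."""
    # Expiry is written in the spec's UTC "...Z" format.
    expires = (now + lifetime).astimezone(timezone.utc)
    return {
        "_type": "timestamp",
        "spec_version": current["spec_version"],
        "version": current["version"] + 1,  # must never go backwards
        "expires": expires.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "meta": {"snapshot.json": {"version": snapshot_version}},
    }
```

Making the lifetime longer than the job interval means one missed CI run does not immediately strand every client. Keeping it short still limits how long an attacker could replay stale metadata.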

All in all, not too complex. Using The Update Framework, we get a high-integrity update chain: fully automatic, low effort for the customer, low effort for us, and low risk of tampering.