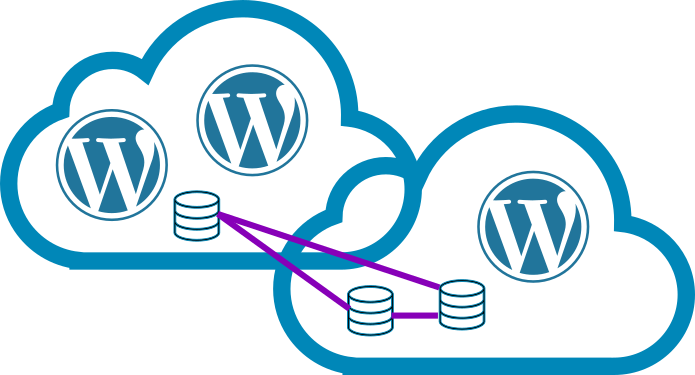

Like many of you, we make an indeterminate number of Docker Hub image pulls per hour. The base layers of something? The CI? Bits and bobs inside our GKE clusters? One area of particular concern: we host applications from our customers, so what base layers do they use?

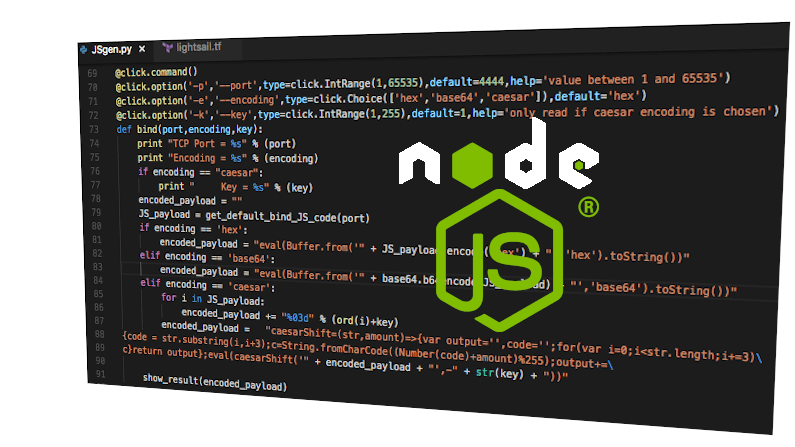

Our general strategy is to build our own containers rather than use upstream ones. For security. For control. The exceptions are well-known projects with strong security cultures: e.g. `FROM ubuntu:20.04` is allowed, `FROM myname` is not.
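A rule like this can be checked mechanically in CI. Here is a minimal sketch in Python, not our actual tooling: the allowlist `ALLOWED_BASES`, the prefix `registry.example.com/`, and the function names are all hypothetical, chosen only to illustrate the idea.

```python
import re

# Hypothetical allowlist of upstream repositories trusted as base layers.
ALLOWED_BASES = {"ubuntu", "debian", "alpine"}
# Hypothetical prefix of images built into our own registry.
INTERNAL_PREFIX = "registry.example.com/"

# Matches "FROM [--platform=...] <ref> [AS <stage>]".
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--platform=\S+\s+)?(?P<ref>\S+)(?:\s+AS\s+(?P<stage>\S+))?",
    re.IGNORECASE,
)


def repository(ref):
    """Strip the digest and tag from an image reference."""
    name = ref.split("@", 1)[0]
    head, sep, last = name.rpartition("/")
    return head + sep + last.split(":", 1)[0]


def disallowed_bases(dockerfile_text):
    """Return FROM references that are neither internal nor allowlisted."""
    stages = set()  # names of earlier build stages ("FROM x AS build")
    bad = []
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if match is None:
            continue
        ref = match.group("ref")
        allowed = (
            ref.lower() in stages
            or ref.startswith(INTERNAL_PREFIX)
            or repository(ref) in ALLOWED_BASES
        )
        if not allowed:
            bad.append(ref)
        if match.group("stage"):
            stages.add(match.group("stage").lower())
    return bad
```

Running `disallowed_bases()` over each Dockerfile in a repository and failing the job on a non-empty result would be one way to apply it. The sketch does not normalise forms such as `docker.io/library/ubuntu`, so a real check would need a little more care.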

We run our own image registry, which authenticates (via JWT) against our GitLab. It works very well with our CI and with our production systems, so our use of the Docker Hub registry is probably minimal. But it's hard to say: we run a lot of CI jobs, and we run preemptible nodes in GKE, which can (and do) restart often. So the newly announced rate limits pose some risk.
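One way to replace "hard to say" with a number is to ask Docker Hub how much of the limit is left for a given source IP. The sketch below assumes Docker's documented `ratelimitpreview/test` repository and the `ratelimit-limit` / `ratelimit-remaining` response headers. The function names are mine, and the check is anonymous, so it reports the per-IP limit rather than an account limit.

```python
import json
import urllib.request

TOKEN_URL = (
    "https://auth.docker.io/token"
    "?service=registry.docker.io"
    "&scope=repository:ratelimitpreview/test:pull"
)
MANIFEST_URL = (
    "https://registry-1.docker.io/v2/ratelimitpreview/test/manifests/latest"
)


def parse_ratelimit(value):
    """Parse a header such as '100;w=21600' into (pulls, window_seconds)."""
    count, _, params = value.partition(";")
    window = None
    for param in params.split(";"):
        key, _, val = param.strip().partition("=")
        if key == "w" and val:
            window = int(val)
    return int(count), window


def fetch_ratelimit():
    """Ask Docker Hub (anonymously) for the limit and the remaining pulls."""
    with urllib.request.urlopen(TOKEN_URL, timeout=10) as resp:
        token = json.load(resp)["token"]
    # A HEAD request returns the headers without fetching the manifest body.
    request = urllib.request.Request(
        MANIFEST_URL,
        method="HEAD",
        headers={"Authorization": "Bearer " + token},
    )
    with urllib.request.urlopen(request, timeout=10) as resp:
        result = {}
        for key in ("limit", "remaining"):
            raw = resp.headers.get("ratelimit-" + key)
            # The headers may be absent; report None rather than guessing.
            result[key] = parse_ratelimit(raw) if raw else None
        return result
```

Calling `fetch_ratelimit()` from a CI runner or a node shows how close that egress IP is to the limit. For an authenticated account, the token request would additionally need that account's credentials.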

GKE has a mirror of some images, and we do use that (both with a command-line option to kubelet on the nodes, and by injecting it into our CI runners in case they still use dind). GitLab recently added some documentation on using a registry mirror with dind. We inject this environment variable into the runner services, just in case:

```yaml
DOCKER_OPTS: "--registry-mirror=https://mirror.gcr.io --registry-mirror=https://dockerhub-cache.agilicus.ca"
```

Now it's time to bring up a cache. First I create an account in Docker Hub, which gets us a limit of 200 pulls per 6 hours. Then I create a pull-through cache. You can see the code below in our GitHub repo, but first, a Dockerfile. I create a non-root user and run as that. Effectively this gives us an upstream-built registry, in our own registry, running as a non-root user.

```dockerfile
FROM registry

ENV REGISTRY_PROXY_REMOTEURL="https://registry-1.docker.io"

# Create an unprivileged user. The leading ':' is a no-op that lets
# every real step start with '&&'.
RUN : \
 && adduser --disabled-password --gecos '' web

USER web
```

And now for the wall of YAML. This is running in my older cluster, which does not have Istio: the one that just exists to host our GitLab etc. I use my trick to share an nginx-ingress while using a different namespace, reducing the need for public IPv4 addresses. I give this a 64GiB PVC and a unique name (dhub-cache), I set some environment variables, and we let it fly.

```yaml
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: dhub-cache
  namespace: default
  annotations:
    kubernetes.io/ingress.class: nginx
    fluentbit.io/parser: nginx
    kubernetes.io/tls-acme: "true"
    certmanager.k8s.io/cluster-issuer: letsencrypt-prod
spec:
  tls:
    - hosts:
        - "dhub-cache.MYDOMAIN.ca"
      secretName: dhub-cache-tls
  rules:
    - host: "dhub-cache.MYDOMAIN.ca"
      http:
        paths:
          - path: /
            backend:
              serviceName: dhub-cache-ext
              servicePort: 5000
---
kind: Service
apiVersion: v1
metadata:
  name: dhub-cache-ext
  namespace: default
spec:
  type: ExternalName
  externalName: dhub-cache.dhub-cache.svc.cluster.local
  ports:
    - port: 5000
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dhub-cache
  namespace: dhub-cache
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 64Gi
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: dhub-cache
  namespace: dhub-cache
automountServiceAccountToken: false
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: dhub-cache
  name: dhub-cache
  labels:
    name: dhub-cache
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dhub-cache
  template:
    metadata:
      labels:
        name: dhub-cache
    spec:
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
        fsGroup: 1000
      serviceAccountName: dhub-cache
      automountServiceAccountToken: false
      imagePullSecrets:
        - name: regcred
      containers:
        - image: MYREGISTRY/dhub-cache
          name: dhub-cache
          imagePullPolicy: Always
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
          ports:
            - name: http
              containerPort: 5000
          livenessProbe:
            httpGet:
              path: /
              port: 5000
            periodSeconds: 30
            timeoutSeconds: 4
            failureThreshold: 4
          readinessProbe:
            httpGet:
              path: /
              port: 5000
            periodSeconds: 20
            timeoutSeconds: 4
            failureThreshold: 4
          env:
            - name: REGISTRY_PROXY_REMOTEURL
              value: "https://registry-1.docker.io"
            - name: REGISTRY_PROXY_USERNAME
              value: "MY_USER"
            - name: REGISTRY_PROXY_PASSWORD
              value: "MY_USER_TOKEN"
          volumeMounts:
            - mountPath: /var/lib/registry
              name: dhub-cache
      volumes:
        - name: dhub-cache
          persistentVolumeClaim:
            claimName: dhub-cache
---
apiVersion: v1
kind: Service
metadata:
  namespace: dhub-cache
  name: dhub-cache
  labels:
    name: dhub-cache
spec:
  type: NodePort
  ports:
    - port: 5000
      targetPort: 5000
  selector:
    name: dhub-cache
---
apiVersion: v1
data:
  .dockerconfigjson: MYINTERNALREGISTRYINFO
kind: Secret
metadata:
  name: regcred
  namespace: dhub-cache
type: kubernetes.io/dockerconfigjson
```
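Once this is applied, a quick sanity check is to request a manifest through the cache using the standard registry HTTP API path (`/v2/<name>/manifests/<tag>`). This is a minimal sketch that assumes the cache answers at the Ingress hostname above without client authentication. The helper names are hypothetical, and `dhub-cache.MYDOMAIN.ca` is the same placeholder used in the YAML.

```python
import urllib.request

# Manifest media types a Docker client would normally accept.
ACCEPT = ", ".join([
    "application/vnd.docker.distribution.manifest.v2+json",
    "application/vnd.docker.distribution.manifest.list.v2+json",
])


def manifest_url(base_url, image, tag="latest"):
    """Build the registry API URL for an image manifest."""
    # Official images such as 'ubuntu' live under the 'library/' namespace.
    name = image if "/" in image else "library/" + image
    return "{}/v2/{}/manifests/{}".format(base_url.rstrip("/"), name, tag)


def check_cache(base_url, image, tag="latest"):
    """Fetch a manifest via the cache; return (HTTP status, content digest)."""
    request = urllib.request.Request(
        manifest_url(base_url, image, tag),
        headers={"Accept": ACCEPT},
    )
    with urllib.request.urlopen(request, timeout=30) as resp:
        return resp.status, resp.headers.get("Docker-Content-Digest")
```

For example, `check_cache("https://dhub-cache.MYDOMAIN.ca", "ubuntu", "20.04")` should return a 200 status and a `sha256:` digest if the cache can reach Docker Hub. A request for an image that is not yet cached is forwarded upstream, so it may count against the account's limit.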