Information exposure. Many servers send out a helpful banner with the specific name and version of the software they run. This in turn attracts low-effort attacks that use tools like Shodan.io to find vulnerable hosts. CWE-200 suggests we need to remove the information exposure. Let’s discuss.

Some hold that hiding these banners increases security; CWE-200, for example, takes this position. Others (myself included) are of the opinion that security through obscurity gives a false sense of security.

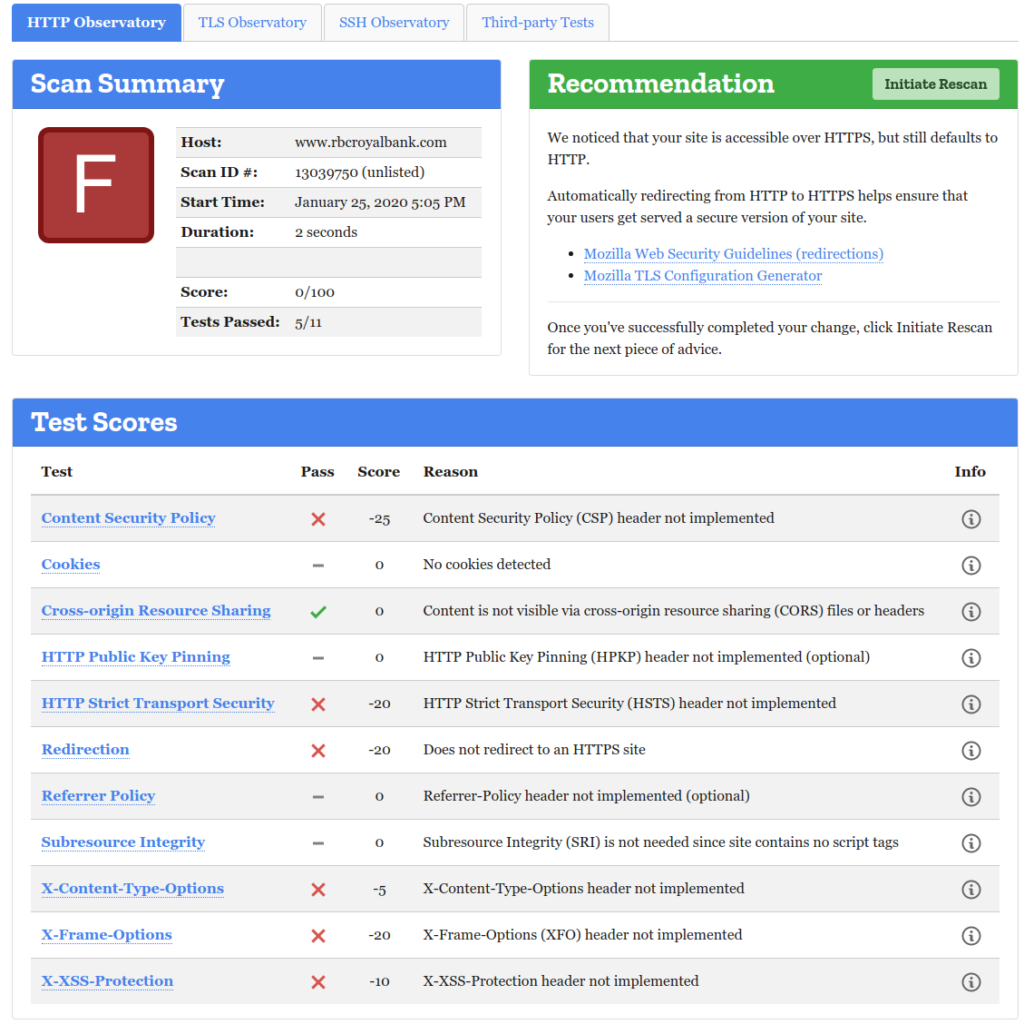

Regardless of your opinion, you will fail parts of your security audit with the banners in place, and that is reason enough to remove them. Let’s look at how to do this with nginx when its sole purpose is a 301 redirect. Why would that be its sole purpose? Because we allow nothing on HTTP (unencrypted), and want a navigation aid for people who end up there the first time (before their browser sees the HTTP Strict Transport Security header, HSTS).

Sadly, nginx by default exposes two pieces of information that we need to remove: the banner in the Server header, and the server name in the body of the 301 response.

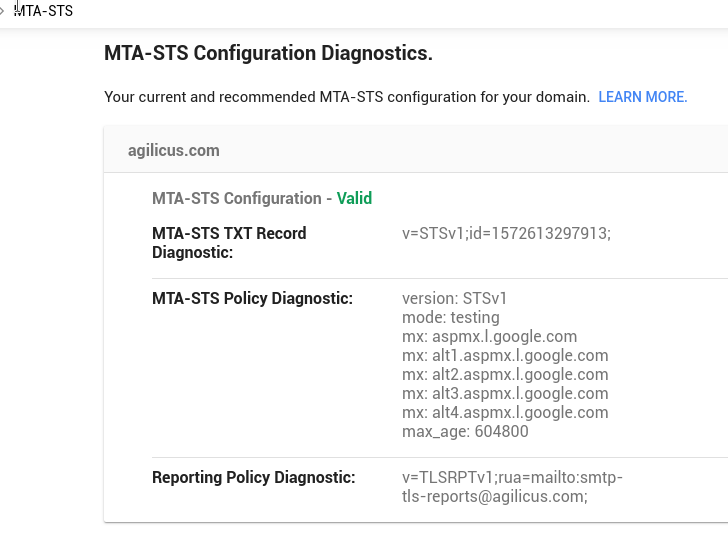
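To make the two leaks concrete, here is a minimal Python sketch that scans one response for them. The function name `find_banner_leaks`, the regular expression, and the sample response (including its version number) are illustrative assumptions, not output captured from a real server. Note that `server_tokens off` on its own only drops the version: the bare name `nginx` still shows up in both places.

```python
import re

# Assumption: the stock nginx error page names the server in a <center> tag.
BODY_BANNER = re.compile(r"<center>\s*nginx(/[\d.]+)?\s*</center>", re.IGNORECASE)


def find_banner_leaks(headers, body):
    """Return a list of findings for one HTTP response.

    headers: mapping of header name -> value (names compared case-insensitively)
    body:    response body as text
    """
    findings = []
    for name, value in headers.items():
        if name.lower() == "server":
            findings.append(f"Server header present: {value}")
    match = BODY_BANNER.search(body)
    if match:
        findings.append(f"server name in body: {match.group(0)}")
    return findings


# Illustrative stock 301 response; the version number is made up.
default_headers = {"Server": "nginx/1.16.1", "Location": "https://example.com/"}
default_body = (
    "<html>\r\n<head><title>301 Moved Permanently</title></head>\r\n"
    "<body>\r\n<center><h1>301 Moved Permanently</h1></center>\r\n"
    "<hr><center>nginx/1.16.1</center>\r\n</body>\r\n</html>\r\n"
)

for finding in find_banner_leaks(default_headers, default_body):
    print(finding)
```

A response with no Server header and an empty body yields an empty list, which is the state we are aiming for.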

Side note: in “We are all in on the HSTS preload” I wrote about how we added our domains to the browser-distributed preload list. I encourage you to do the same.

OK, back? All preloaded? Good. You won’t forget again and let some unencrypted service go live! Let’s examine the config. I’ll dump it all below, and then discuss after (yes, it’s a bit of a mouthful!)

    load_module modules/ngx_http_headers_more_filter_module.so;

    worker_processes 1;
    # Log to stderr; keep the pid file somewhere a non-root user can write.
    error_log /dev/stderr warn;
    pid /tmp/pid;

    events {
        worker_connections 1024;
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        # Drop the Server response header entirely (headers-more module).
        more_clear_headers Server;

        # 0 = kubelet probe (not logged), 1 = everything else (logged).
        map $http_user_agent $excluded_ua {
            ~kube-probe 0;
            default 1;
        }

        log_format json escape=json '{ "time": "$time_iso8601", "remote_addr": "$proxy_protocol_addr",'
            '"x-forward-for": "$proxy_add_x_forwarded_for", "request_id": "$request", "remote_user": '
            '"$remote_user", "bytes_sent": $bytes_sent, "request_time": $request_time, "status": '
            '$status, "vhost": "$host", "request_proto": "$server_protocol", "path": "$uri", '
            '"request_query": "$args", "request_length": $request_length, "duration": $request_time, '
            '"method": "$request_method", "http_referrer": "$http_referer", "http_user_agent": '
            '"$http_user_agent" }';

        # Write an access-log line only when $excluded_ua is neither 0 nor empty.
        access_log /dev/stdout json if=$excluded_ua;

        server_tokens off;
        keepalive_timeout 65;

        server {
            error_log /dev/stderr;

            # Health check endpoint, kept out of the access log.
            location /healthz {
                access_log off;
                return 200 "OK\n";
            }

            listen 8080 default_server;
            server_name _;
            server_tokens off;

            # Serve every listed status with the empty page below instead of the stock one.
            error_page 301 400 401 402 403 404 500 501 502 503 504 /301.html;

            # The 200 will be modified by the later return 301;
            location = /301.html {
                internal;
                return 200 "";
            }

            # Everything else: redirect to the same host, path, and query over HTTPS.
            location / {
                return 301 https://$host$request_uri;
            }
        }
    }

OK, that was a lot of text! But, in a nutshell, we are:

- setting logs to stdout/stderr (so we can run under a CRI runtime in Kubernetes)
- loading ngx_http_headers_more_filter_module to remove the nginx banner from the Server header
- adding a JSON log format (so it works better w/ fluent-bit)
- squelching logs for kube-probe and /healthz
- replacing the stock 30x, 40x, and 50x pages with an empty body
- responding with a 301 redirect to https://$host$request_uri, so the host, path, and query string are all preserved
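The log squelching is the least obvious item in that list, because the variable name reads backwards: `$excluded_ua` is 1 for requests we do want logged. Below is a rough Python model of the decision, assuming I have read the `map` and `access_log ... if=` semantics correctly. The helper names `excluded_ua` and `should_log` are mine and exist only for illustration.

```python
import re

# "~kube-probe" in an nginx map is a case-sensitive, unanchored regex.
KUBE_PROBE = re.compile(r"kube-probe")


def excluded_ua(user_agent):
    """Value of $excluded_ua: "0" for kubelet probes, "1" for everyone else."""
    return "0" if KUBE_PROBE.search(user_agent) else "1"


def should_log(user_agent, path):
    """True when the request would produce an access-log line."""
    # "location /healthz" is a prefix match and sets "access_log off".
    if path.startswith("/healthz"):
        return False
    # "access_log ... if=VALUE" skips the request when VALUE is "0" or empty.
    return excluded_ua(user_agent) not in ("0", "")


samples = [
    ("kube-probe/1.15", "/"),
    ("Mozilla/5.0", "/healthz"),
    ("Mozilla/5.0", "/index.html"),
]
for ua, path in samples:
    print(ua, path, should_log(ua, path))
```

Only the last sample is logged. Because the match is case-sensitive, a client that sends `Kube-Probe` would still be logged.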

You’ll need a container w/ the nginx-mod-http-headers-more package installed. It can be as simple as:

    FROM alpine:3.10
    LABEL maintainer="don@agilicus.com"

    RUN apk update \
     && apk --no-cache add nginx-mod-http-headers-more \
     && touch /var/log/nginx/error.log \
     && chown nginx:nginx /var/log/nginx/error.log

That was easy! Now we just run this as non-root on an unprivileged port (8080 in the config above), with a Kubernetes Service forwarding port 80 to it. And boom, we have an anonymous HTTP->HTTPS redirect. We have removed the information exposure.
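If you want to confirm the result before the auditor does, a small smoke test helps. This is a sketch using only the Python standard library. `check_response` and `probe` are hypothetical helper names, and the address, port, and host name in the usage note are placeholders for wherever your container is reachable.

```python
import http.client


def check_response(status, headers, body, host, path):
    """Return a list of problems; an empty list means the redirect looks anonymous.

    headers is a list of (name, value) pairs, as http.client returns them.
    """
    problems = []
    lowered = {name.lower(): value for name, value in headers}
    if status != 301:
        problems.append(f"expected 301, got {status}")
    expected = f"https://{host}{path}"
    if lowered.get("location") != expected:
        problems.append(f"unexpected Location: {lowered.get('location')!r}")
    if "server" in lowered:
        problems.append(f"Server header still present: {lowered['server']!r}")
    if body:
        problems.append(f"body is not empty ({len(body)} bytes)")
    return problems


def probe(address, port, host, path="/some/page?x=1"):
    """Fetch one URL over plain HTTP (http.client never follows redirects)."""
    conn = http.client.HTTPConnection(address, port, timeout=5)
    try:
        conn.request("GET", path, headers={"Host": host})
        resp = conn.getresponse()
        return check_response(resp.status, resp.getheaders(), resp.read(), host, path)
    finally:
        conn.close()


# Offline demonstration with a canned response that still leaks the name.
leaky = [("Server", "nginx"), ("Location", "https://example.com/a?b=1")]
for problem in check_response(301, leaky, b"<hr><center>nginx</center>", "example.com", "/a?b=1"):
    print(problem)
```

Against a running container you would call something like `probe("127.0.0.1", 8080, "example.com")` and expect an empty list back.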