Agilicus upgraded our web site infrastructure, and there was only one way to go: full Cloud Native. Cloud Native means many small components, stateless, scaling in and out, resilient as a whole rather than individually. As a consequence, we made design decisions for database and storage. Let’s talk about that!

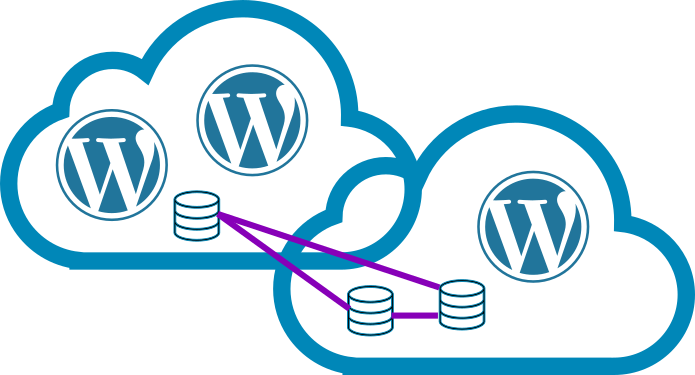

First, WordPress. It has been around for a long time. The WordPress architecture dates to an era when ‘Cloud’ meant a virtual machine at best. You ran a single WordPress instance and a single database, and the storage was tightly coupled to the WordPress instance. Fancy folk put the storage on a fileserver and ran 2 WordPress instances against it. But you always had to treat a few components (storage, database) as infinitely reliable and scalable. Very few native WordPress installs run at high scale; most instead either use a headless CMS outputting static files, or are front-ended by CDNs and a double dash of hope.

When I first started Agilicus, I installed WordPress under Kubernetes (GKE), but only just. I had a cluster with non-preemptible nodes. I ran a MySQL pod with a single PVC and a WordPress pod with a single PVC. Scale? Forgetaboutit. Resilience? Well, when the node went down, the web site went down.

Clean sheet. We counsel our customers to run on our platform, so why not re-imagine our web site, our front door, our public face, on the same platform? Eat the delicious dogfood!

So, what does Dogfooding mean? It means no cheating. Remove all limitations. Make all the state scalable, cloud native. Simple single sign-on.

The architecture of WordPress has a few complexities for a Cloud Native world:

- Plugins have unlimited, direct access to database and filesystem

- User can install plugins from web front-end

- Content is hybrid local-file and database

- Plugins modify content (e.g. scale images, compress css)

- Database must be MySQL; it’s hard-coded everywhere

- Login infrastructure designed for local storage in database

OK, I got this. Let’s bring in a few tools to help. First, the database. For this we will use TiDB. It presents a MySQL facade and is built on TiKV, which in turn is based on the Raft consensus algorithm. Raft is quite magic, and powers many Cloud Native components (etcd, CockroachDB, …). The Raft algorithm allows individual members to be unreliable, to come and go, while the set as a whole stays consistent and reliable, as long as a majority of members remain available. Within that limit, it’s bulletproof.
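To make the "members come and go" point concrete, here is a minimal sketch of the majority rule that Raft relies on. It is not Raft itself (there is no leader election or log replication here), and the function names are made up for illustration.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority still remains."""
    return members - quorum(members)


def can_commit(members: int, available: int) -> bool:
    """A write can commit only if a majority of members can acknowledge it."""
    return available >= quorum(members)
```

With 3 members one can fail, with 5 members two can fail. A 4-member set still only tolerates one failure, which is why odd sizes are the usual choice.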

To deploy TiDB we will use an Operator, allowing us to scale the database up and down and to upgrade it. Now we can upgrade the database or add capacity without any impact on the running site. Brilliant!

Now that the database is solved in a Kubernetes, Cloud Native way, on to the storage. This is considerably tougher: there is no Read-Write-Many storage in GKE. So, what can we do? I considered using GlusterFS. I’ve previously tried NFS. Terrible. It turns out there is a plugin for WordPress called wp-stateless. It causes all the images etc. to be stored in Google Cloud Storage (GCS), and thus to be accessible to all the other Pods (and the end user). Solved!

Moving on, the plugin issue. For this I built my own WordPress container. Each time it boots, it pre-installs the known set of plugins. Then it looks in the database for the ones that were active, and re-installs those. Thus each time the container boots, it forces the local filesystem into sync before coming online. The same approach is used for the theme. Onwards!
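The boot-time sync boils down to a set calculation, roughly as sketched below. This is an illustration only: the function name and the plugin names other than wp-stateless are hypothetical, and the way the real container reads the database or performs the install is not shown here.

```python
from __future__ import annotations

from typing import Iterable


def plugins_to_install(
    known_set: Iterable[str],
    active_in_db: Iterable[str],
    on_disk: Iterable[str],
) -> list[str]:
    """Return the plugins that must be installed before the pod goes online.

    known_set    -- plugins the container always pre-installs (assumed list)
    active_in_db -- plugins the database records as active
    on_disk      -- plugins already present on the local filesystem
    """
    wanted = set(known_set) | set(active_in_db)
    # Sorted only so the result is deterministic and easy to log.
    return sorted(wanted - set(on_disk))
```

A freshly booted pod has an empty `on_disk`, so it installs the union of both sets; a pod that already holds some of them installs only the difference.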

OK, the login. For this I wrote an OpenID Connect plugin that interacts with our Platform, bringing the roles forward. This gives us seamless, secure single sign-on. It logs in against accounts in our Platform, which in turn are federated to upstream identity providers (G Suite in our case). Done!
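"Bringing the roles forward" amounts to translating a role carried in the identity token into a WordPress role. The sketch below shows one way that mapping could look. Everything about the platform side is an assumption: the `roles` claim name and the platform role names are invented for illustration, and token validation (signature, issuer, audience) is deliberately left out. Only the WordPress role names on the right are the standard built-in ones.

```python
# Hypothetical platform role -> built-in WordPress role.
ROLE_MAP = {
    "owner": "administrator",
    "editor": "editor",
    "viewer": "subscriber",
}

# Most privileged first, so a user with several roles gets the highest one.
PRIORITY = ["administrator", "editor", "subscriber"]


def wordpress_role(claims: dict) -> str:
    """Pick a WordPress role from already-validated token claims."""
    granted = {ROLE_MAP[r] for r in claims.get("roles", []) if r in ROLE_MAP}
    for role in PRIORITY:
        if role in granted:
            return role
    # Assumed default: unknown or missing roles get the least privilege.
    return "subscriber"
```

Defaulting to the least-privileged role is a design choice in this sketch; refusing the login outright would be an equally reasonable policy.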

One last thing I wanted to accomplish: rather than have the user see https://storage.googleapis.com/bucket/path for the images, I wanted them to appear local (even though they are not). Now, we could make a CNAME and point it at Google Cloud Storage, but that doesn’t work with SSL/TLS. Since I have added our domain to the HSTS preload list, we have no choice (as if I would do otherwise anyway) but to use encryption. Instead, I added a simple config to our nginx, below:

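    # Upstream pool for Google Cloud Storage; keepalive reuses idle connections.
    upstream assets {
        keepalive 30;
        server storage.googleapis.com:443;
    }

    # Serve everything under the /www prefix (wp-stateless media) from the bucket.
    location ^~ /www {
        proxy_set_header Host storage.googleapis.com;
        proxy_pass https://assets/bucket$uri;

        # HTTP/1.1 with an empty Connection header is needed for upstream keepalive.
        proxy_http_version 1.1;
        proxy_set_header Connection "";

        # Strip storage-specific response headers the browser does not need.
        proxy_hide_header alt-svc;
        proxy_hide_header X-GUploader-UploadID;
        proxy_hide_header alternate-protocol;
        proxy_hide_header x-goog-hash;
        proxy_hide_header x-goog-generation;
        proxy_hide_header x-goog-metageneration;
        proxy_hide_header x-goog-stored-content-encoding;
        proxy_hide_header x-goog-stored-content-length;
        proxy_hide_header x-goog-storage-class;
        proxy_hide_header x-xss-protection;
        proxy_hide_header accept-ranges;

        # Drop any upstream cookies and keep them out of nginx's own processing.
        proxy_hide_header Set-Cookie;
        proxy_ignore_headers Set-Cookie;
    }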

The first block tells nginx how to reach Google storage. The second block matches on a prefix: everything under /www/ is a static image from wp-stateless, and I forward those requests to my bucket in GCS. The proxy_hide_header lines are not strictly required, but they trim the response sent to the end user, so they are helpful.
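To see what the location block does to a request, the rewrite can be modelled in a few lines of Python. This is a simplified sketch: `upstream_url` is a made-up helper, `bucket` is the same placeholder name used in the config, and nginx’s `$uri` is also percent-decoded and normalized, which is ignored here.

```python
from typing import Optional
from urllib.parse import urlsplit


def upstream_url(request_uri: str, bucket: str = "bucket") -> Optional[str]:
    """Model of `location ^~ /www` plus `proxy_pass https://assets/bucket$uri`.

    Returns the URL fetched from Google Cloud Storage, or None when the
    request does not match the /www prefix and is handled elsewhere.
    """
    # $uri holds the path only; the query string is not forwarded.
    path = urlsplit(request_uri).path
    if not path.startswith("/www"):
        return None
    return f"https://storage.googleapis.com/{bucket}{path}"
```

So a request for `/www/2019/logo.png?ver=1` on our own domain is fetched from `https://storage.googleapis.com/bucket/www/2019/logo.png`, while a request for a blog page never touches the bucket.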

OK, so what have we achieved? We now have WordPress, fully stateless, running under Kubernetes. We can scale the WordPress pods in and out, load balanced and health-checked by our Istio service mesh. We have our Identity-Aware Web Application Firewall ensuring security. We have simple single sign-on. We can grow the database and storage as needed, resiliently and reliably.

All to deliver you, the people, these pithy notes. So, remember to subscribe, via the bell in the lower right or via email.